Guide to assessment and recording assessments

A guide to assessment and recording assessment (2020 update).

There are many assessment methods that panels can use. This guide has been developed to help panels find the methods best suited to the criteria being assessed. It should also help them to carry out assessment in a fair and transparent way, which in turn will help to attract the strongest possible pool of people who fit the person specification.

The materials in this guidance have been developed over 15 years and have drawn on good practice guides produced by well-respected organisations in the field of recruitment and selection such as the Chartered Institute of Personnel and Development (CIPD), Pearn Kandola and, more recently, Applied, a spin-off of the UK Government’s Behavioural Insights Team.

This guide assumes that the reader is aware of and has read the Code of Practice for Ministerial Appointments to Public Bodies in Scotland (the Code) and the supporting statutory guidance.

The guidance has been split into discrete sections below for ease of reference.

Here are some extracts from the Code that should help to explain what’s anticipated when designing assessment methods:

The purpose of the process is to attract a diverse range of able applicants and appoint the most able to lead Scotland’s public bodies in the delivery of efficient and effective public services.

The purpose of the Code is to provide the framework that enables the Scottish Ministers to attract and appoint the most able people in a manner that meets the requirements of the Act.

The Code principles:

Merit

All public appointments must be made on merit. Only persons judged best able to meet the requirements of the post will be appointed.

Integrity

The appointments process must be open, fair and impartial. The integrity of the process must earn the trust and have the confidence of the public.

Diversity and Equality

Public appointments must be advertised publicly in a way that will attract a strong and diverse field of suitable candidates. The process itself must provide equality of opportunity.

The public appointments process will be outcome focused and applicant focused.

PLEASE NOTE:

As these are public appointments, they must be fair and demonstrably fair.

The purpose of the assessment is to identify the applicant who is most able to meet the requirements of the board at that point in time.

“Most able” (or merit) is defined by the appointing minister at the start of an appointment round, according to the needs of the board.

These needs are set out in the person specification, which is published in the applicant information pack. The person specification should explain in transparent terms what “most able” looks like. It is usually a mix of skills, knowledge, experience and personal qualities such as values. Each criterion for selection should have indicators that describe what meeting it (that is, good evidence) looks like.

Further help and guidance on defining most able is available in the core skills framework.

Once most able has been defined using the core skills framework, all assessment should be based on identifying the applicant who most closely matches this definition.

AT NO POINT SHOULD ANY OTHER FACTOR BE TAKEN INTO ACCOUNT AS PART OF THE ASSESSMENT. This includes not only any additional skills, knowledge, experience or values which the applicant highlights during any part of the assessment and which were not included in the original definition, but also any higher level, greater length of experience or more recent application of a skill, knowledge, experience or personal quality over and above that stated in the application pack.

Additionally, in order to be deemed most able, people need to meet the fit and proper person test as defined in paragraph E6 of the Code and clarified further by section 7 of the guidance.

Application and assessment methods should be chosen because they have validity. A simple description of the different types of validity is set out below.

A note about validity: validity is increased when indicators are used to describe what good evidence that a criterion has been met will look like. It is decreased when indicators are used that do not do this. In summary, indicators should include:

(Based on Pearn Kandola’s research on behaviourally anchored rating scales (BARS).)

Further information and examples can be found in the Core skills framework.

Predictive validity is the extent to which the form of assessment will predict who will perform effectively in the role.

Pilbeam & Corbridge (2006) provide a summary of the predictive validity of selection methods based on the findings of various research studies.

| Predictive validity | Selection method |
|---|---|
| 70% | Assessment centres for development |
| 60% | Skilful and structured interviews |
| 50% | Work sampling |
| 40% | Assessment centres for job performance |
| 30% | Unstructured interviews |
| 10% | References |
| 0% | Graphology |

An earlier meta-study of assessment techniques showed that work samples (i.e. simulations of the task to be performed) were the most effective method. Later studies have indicated that a mix of methods is most effective. The CIPD website includes more information on different methods and their application. The Applied website also has resources on designing appropriate assessment methods based on behavioural science.

Assessment centres involve a number of different exercises, each assessing different aspects of the individual, which culminate in an overall assessment. They therefore provide a good indication of future performance in the role.

IN ALL CASES the form of assessment chosen must be considered in line with all forms of validity.

Face validity is the extent to which applicants consider the form of assessment to be credible and/or acceptable.

For example, people applying for a chair role may not feel that a group exercise, in which they are assessed alongside other applicants for the same role, is a credible or acceptable form of assessment, even though it may have some predictive validity.

Content validity concerns whether an assessment method assesses the attribute sought, as opposed to something else, and the extent to which it assesses it.

For example, if I am being appointed because of a specific area of expertise, such as effective oversight of large-scale capital expenditure projects, is it necessary for me to give a presentation to a selection panel? If I am poor at delivering presentations, the panel may mistake this for a lack of expertise. Equally, if questioning on my area of expertise is superficial, the assessment will lack validity. This is why the Code of Practice makes specific reference to the use of expert panel members.

There are many ways in which applicants can be assessed, and the Code has been designed flexibly to both enable and encourage the use of a variety of forms of assessment.

Most appointment rounds will use an initial stage of assessment in the form of a written application, followed by a final stage involving some form of face-to-face assessment with the panel (often an interview). The Code does not require this, and panels are free to select whichever forms of assessment best enable them to identify the most able applicant for the role. For example, if the panel considers it suitable, it is perfectly acceptable to ask all applicants for a simple note of interest and to interview everyone who submits one.

PLEASE NOTE THAT the entire process is used to identify the most able candidate(s). The initial application stage is not a mini-competition or hurdle that people have to get over and the appointment process doesn’t reset just prior to interview. Panels should take account of all of the information and evidence that applicants have provided over the course of an appointment process in order to reach their assessment decisions.

For each and every assessment method that the panel chooses there should be three stages involved in the assessment. These are:

1. Data Gathering (e.g. applicant makes written application, a panel takes notes during interview or other practical exercise and so on).

2. Evaluation (e.g. panel members evaluate the quantity and relevance of the information provided and, where possible and necessary, such as in an interview, request further or more relevant information through probing).

3. Decision Making (Panel discussions and comparison to make group decisions about the applicant(s) who most closely meet the criteria for selection on the basis of the information provided).

These activities should not be carried out simultaneously. Behavioural science has shown that the increased cognitive load involved in doing so makes it more likely that decisions will be based on factors other than the evidence, i.e. on biases.

Allowing plenty of time for assessment improves decision making. For example, panel members should not be making decisions about suitability during interviews. Their evaluation during an interview should be limited to whether their questions are generating the quality and amount of information they need (otherwise they would not know whether further probing was appropriate).

When considering the form of assessment to use, it may help to consider the results of previous applicant surveys in order to gauge applicant views about them.

The initial stage of assessment would usually take the form of a written application. Examples include one or more of the following: a traditional application form (with or without a word limit, providing evidence against the criteria), a CV with covering letter, a tailored career/life history, or an overarching statement. Panels should be cautious about gathering information, such as CVs, that is not directly relevant to the role in question. Behavioural science has shown that doing so leads to new requirements being introduced into the assessment, often unconsciously. More information on the potential drawbacks of assessment using CVs is available on the Applied website.

Other options, which are not used as widely, include initial telephone interviews or video applications. These less widely used formats each bring additional complexity that would need to be considered, for example: how applicants can access the technology required if they do not already have it; how the panel can access the applications; whether this would be the sole method of application or used alongside a written application; and, if it is the sole method, how contact details and monitoring data would be collected.

Panels should consider whether or not to make the initial assessment anonymous. Doing so allows the panel to focus solely on the evidence against the criteria. However, in some rounds (particularly where the pool of potential applicants is small) applicants may be recognisable anyway, in which case anonymity will have no effect.

Some pointers for assessing initial applications

There are various forms of interview which the panel can choose from. The Code is not at all prescriptive about which type of interview panels should use. It simply expects the assessment method to be appropriate to what is being assessed. Any combination of the interview types below, or others not referred to, will be legitimate if they achieve this aim.

Some pointers for interviews

Providing views about applicants. Each panel member should first draw their own conclusions about the evidence presented and write down their reasons for those conclusions. The panel chair should then ask each panel member to give their independent view before the panel reaches its collective conclusion. The role of “first person to offer a view” should be rotated throughout the day. The collective conclusion is the one used as the record of the assessment and included in the applicant summary. The summary is the agreed conclusion of the panel and cannot include references to individual dissent on the part of panel members. For the same reason, individual panel members’ notes should be disposed of once the panel has agreed the content of the applicant summary.

Applied recommends that questions should be designed to reflect job simulations. More information is available on their website. Some of the more common techniques currently in use are set out below.

Situation, Task, Action, Result (Reflection) (S.T.A.R.(R)) / Competency based assessment

This is the technique that is most commonly adopted in the public sector. It is also known as competency based assessment. Its success, as with other similar techniques, is predicated on the panel knowing in advance what good evidence will look like. This in turn relies on the design of clear criteria for selection and associated indicators (as explained in the "defining what needs to be assessed" section above).

In this technique, candidates are expected to describe a situation (S), the task that they were required to perform (T), the action that they took (A) and the result (R). In some cases, candidates are also asked to reflect (R) on the situation. What, in hindsight, might they have done differently?

Benefits:

Potential drawbacks:

It should also be noted that this type of interview is unlikely to be sufficient for assessing certain personal qualities in any depth, and it should therefore always be considered as one option to be used alongside others to generate the necessary evidence. For example, using simulations to test skills is likely to be more equitable for people, regardless of their age and experience.

Started, Contribution, Amount, Result (S.C.A.R.)

This technique is similar to the STAR(R) technique and is used to assess the extent to which someone has taken ownership of an issue and initiated a particular course of action in order to improve a situation. It can be particularly effective at identifying people who have change management skills.

Performance based interviewing (PBI)

Also known as “the one question” interview, this technique is more discursive and can allow the conversation to flow more naturally and freely. As a consequence, it may be less susceptible to rehearsed responses and/or sectoral background bias whilst still generating the evidence sought. It also allows for hypothetical questions to be asked and related back to the previous behaviours. This can be very important when appointing people to posts that require the successful candidate to have a clear vision and plan for an organisation’s future in the short, medium or longer term. The following is not an exhaustive list of potential questions but does demonstrate that questioning in this way can generate evidence that criteria for a role are met without referring specifically to the criteria themselves. Here’s an example of how it works in practice (with areas potentially being tested in brackets):

Strengths based interviews (S.B.I)

Whereas traditional competency-based interviews aim to assess what a candidate can do, a strengths-based interview looks at what they enjoy doing and have a natural aptitude for. The approach is predicated on the understanding that people will be more motivated to fulfil roles effectively when the activities that they will be required to perform are a match for what they enjoy doing. These interviews therefore seek to identify what energises and motivates the candidate.

Questions could include: what kind of situations do you excel in? What tasks do you find most enjoyable? Can you describe in detail an example of where you feel you performed your best in the role?

This approach will be unfamiliar to many, and therefore has the advantage of reducing the likelihood of candidates providing pat answers. A drawback of using this technique in isolation from competency based assessment is that its relevance and predictive validity may be limited.

As explained above, each type of interview generally involves a variety of questioning techniques to elicit information. Some of the most common techniques are set out below.

Probing or Reflective Questions

Probing questions encourage the candidate to provide more information and to expand on answers already provided. They also allow interviewers to request more specific answers when initial responses are vague, limited in detail, confusing or unclear.

Examples

Reflective questions encourage candidates to add to answers they have already given. They can also be used to link an earlier answer to a new question. They are helpful at triggering memories and assisting with recall (see cognitive questioning below). They also demonstrate that the interviewers are listening to what the candidate is saying and that they have taken account of the earlier stages of assessment such as an initial application.

Examples

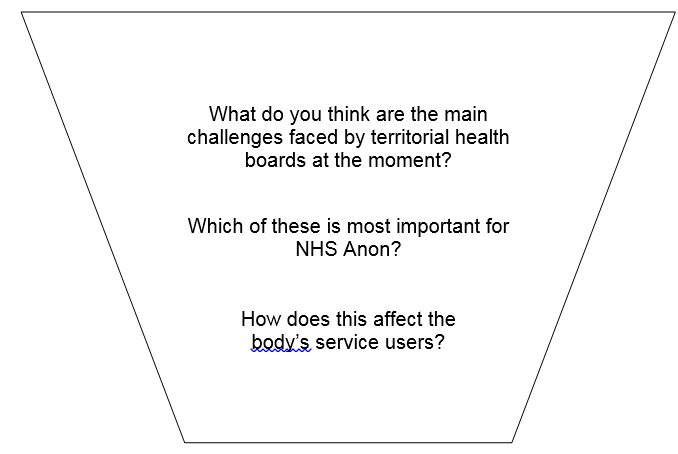

The Question Funnel

This technique assesses whether a candidate has the ability to recognise a wider or strategic context (the big picture) and then make reasoned judgments about specific aspects of it.

Question funnel questions – examples

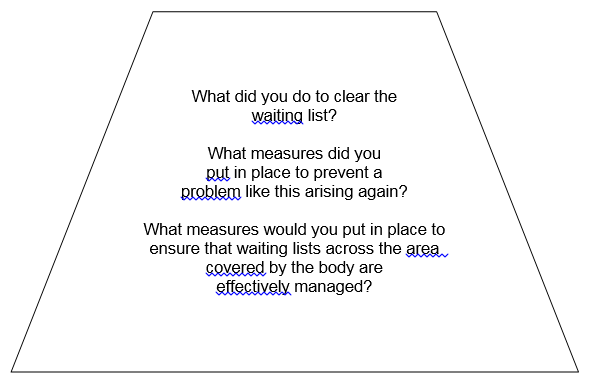

The Inverted Question Funnel

This technique can help a more nervous candidate to open up.

Both funnel techniques can give rise to purely hypothetical answers, which may not be good indicators of ability in the role, but they can be useful for establishing strategic thinking in particular.

Inverted funnel questions – examples

Cognitive questioning

Because of the way memories are encoded and stored, memory recall is effectively a reconstruction of elements scattered throughout various areas of our brains. Memories are not stored in our brains like books on library shelves, or even as a collection of self-contained recordings or pictures or video clips, but may be better thought of as a kind of collage or a jigsaw puzzle, involving different elements stored in disparate parts of the brain linked together by associations and neural networks.

Memory retrieval therefore requires re-visiting the nerve pathways the brain formed when encoding the memory, and the strength of those pathways determines how quickly the memory can be recalled. Recall effectively returns a memory from long-term storage to short-term or working memory, where it can be accessed, in a kind of mirror image of the encoding process. It is then re-stored back in long-term memory, thus re-consolidating and strengthening it.

This technique is a way of stimulating the different pathways involved in recall by using different cues.

When candidates appear to be giving pat or rehearsed responses to questions, or seem unable to recall what they have done, this technique helps to surface more accurate evidence. The technique can help to identify whether people’s initial responses accurately reflect what they did. For example:

The technique should not be used all of the time, but only in situations where a candidate is struggling to recall or where the response lacks coherence. It should not be used in a challenging way to “catch out” candidates, but rather as a way of ensuring that the panel has a complete picture.

It works by stimulating disparate parts of the brain where our memories are stored diffusely. It enhances recall and tackles exaggeration. It can be contrasted with more traditional questioning in a few ways:

[Table: traditional questioning compared with cognitive questioning]

There are four different areas or “cues” that the interviewer can use individually or in combination to elicit the information sought. These are:

Personal context includes things like how the interviewee felt about a given situation. It can also include questions on the implications of a course of action for the interviewee and others.

Example questions:

Different perspectives includes questions on how others felt about, or were affected by, the situation being recalled.

Different order means mixing up the order of events that you are asking your interviewee about.

Associations means asking questions about other things that were going on at the time of the events under exploration.

Whilst questions in these areas are not necessarily directly relevant to what is being assessed, it is legitimate to use them if they elicit the evidence that the panel is looking for.

Some examples of simulations or practical exercises which have been used include:

Presentation – applicants are asked to make a presentation to the panel on a specific subject. This may or may not include the option to use PowerPoint or other visual prompts. This method should be used with caution, as it is sometimes adopted as standard when in fact there are no criteria for selection related to making presentations (see validity above).

Prepared response – similar to a presentation but more relaxed. Applicants are asked to speak to the panel on a topic, and the panel may or may not follow this with questions.

Board paper exercise – applicants are asked to consider a board paper and answer one or more questions related to its content. Applicants might be sent the board paper in advance, or asked to arrive early enough on the day to be given the paper and have time to consider it.

Psychometric testing – this usually involves a set of online tests used to measure individuals’ mental capabilities and behavioural style. Psychometric tests are designed to measure candidates’ suitability for a role based on the required personality characteristics and aptitude (or cognitive abilities). The panel will usually be provided with a report from a psychologist who has examined the applicant’s results and who may suggest areas for further questioning at interview.

Role play – this involves simulating a real-life situation, usually with someone specifically trained to undertake the role required. The panel will observe and assess the applicant’s response to the situation presented by the professional.

When using simulations or practical exercises it is important that panels carefully consider the combination of validity and assessment (see below).

It is also important that panels explain in advance how they plan to assess candidates and why. This approach is transparent and provides public assurance about the way in which the process is being conducted.

The process should be designed to find the most able board members, not those most effective at completing forms and/or performing at interview. Panels should be clear about what they are testing and how they are testing it. For example, experience and ability are different things and should be assessed in different ways.

The selection panel will usually test skills by using competency-based questioning at interview or in a written application. In either case applicants will be asked to provide examples of having put their skills to use in previous situations. The panel may also use an assessment centre approach to test certain skills such as team working and/or communications. Panels may also set specific tasks such as asking applicants to review a board paper to assess skills such as analysis and judgment or to make a presentation to assess communication and presentation skills.

The panel will establish not just whether applicants have used a given skill but how effective they are at putting it into practice.

The panel will not take into account whether applicants have applied their knowledge in practical circumstances unless it is clear from the person specification that practical application is important. The use of wording such as “a working knowledge” means that the panel will look for evidence of applicants having applied their knowledge to practical situations by asking them to provide examples of having done so.

The panel will usually test knowledge by questioning applicants’ understanding of the subject area. The panel may also set a test or exam, either online or as part of an assessment centre exercise. Applicants will be advised of the assessment methods being used in the application pack. The panel will establish not just whether applicants have the knowledge but how in-depth it is, and will identify the applicants who are most knowledgeable in the subject area. In some cases, although rarely, the role may require a qualification. If so, this will always be made explicit in the person specification, as will whether the qualification has to be at a certain level. Verification in this case will usually be by asking applicants to confirm, by way of a tick box or similar, that they have the qualification. This can then be checked with the awarding body.

Where experience is sought, the panel will usually include a section entitled “Life History” in the application form, or ask applicants to provide a tailored CV and/or a letter. In all cases applicants will be asked to set out the roles they have held or the activities that they have engaged in that are relevant to the experience described in the person specification.

The person specification can also give guidance on the type of backgrounds or positions in which the experience might have been gained. Experience does not have to have been gained in a professional capacity. Experience gained in applicants’ personal lives and from any voluntary work they have done is equally valid. In some cases, the experience sought may be something very personal to potential applicants, such as direct experience of social exclusion or first-hand experience of the accessibility issues that affect public-service users with a disability.

The panel will compare what applicants have written against the type of experience it is looking for to see which applicants provide the closest match. The panel may ask follow up questions at interview to see how effective applicants have been in the roles they have held. If this is planned it will be made clear in the person specification.

The NHS in Scotland has introduced a version of values based recruitment for all new chair and board member posts. This is currently a hybrid form of the appointments process: it uses the core skills framework to describe the skills, knowledge and experience sought, and a narrative explanation of the values sought, which tells applicants that their behaviours should be aligned with those values. Here is an extract from a recent pack:

The values that are shared across NHSScotland are outlined in the Everyone Matters: 2020 Workforce Vision. These are:

Embedding these values in everything we do. In practice this means:

The values are usually assessed using psychometric tests and simulated activities, as well as through the written application and interviews. As with all other regulated appointments, the appointment pack will continue to be clear about the assessment methods that will be used.

The Commissioner anticipates that other appointing ministers may seek to include values as essential personal qualities for future appointments that they make. It is also anticipated that the core skills framework will be further adapted to include appropriate indicators against the relevant criteria for selection such that both panels and applicants are fully aware of the types of behaviours that are being sought.

This document refers throughout to a number of ways in which panels can mitigate bias during the process. As a reminder, they are also listed below:

In addition, there is a Mitigating Bias Crib sheet which will be useful for panels to consider.

Following the final stage of assessment, the selection panel will have a lot of information available about each applicant from their initial application and from the interview and/or selection exercise(s) used. The panel’s job is then to assess all of this information to identify whether each applicant meets the criteria for selection and associated indicators. The panel will then need to record its group decisions and reasoning. This record will form the basis of the minister’s decision, as well as providing feedback to applicants and supporting evidence that decisions were appropriate in the event of a complaint. The role of the panel chair is vital at this stage: the chair is tasked with summing up the panel’s agreed view on how each applicant and candidate did or did not meet the criteria for selection.

Particular care must be taken over the contents of the applicant summary. It should include contextual information provided by applicants where this is relevant to the criteria for selection. It should not include reference to apparent new requirements and, as should be clear from the foregoing, new requirements should not in any case have featured in the assessment of applicants.

For example

General knowledge of employment law is required in the person specification. The applicant summary notes that the applicant had general knowledge but not detailed knowledge relevant to the work of the body. This is fed back to the (unsuccessful) applicant. The applicant may conclude that they have been ruled out for reasons not related to the published requirements. The applicant may also conclude that they have wasted their time and effort in applying. Examples of good and poor practice in recording applicant summaries follow.

In this section you’ll find examples of applicant summary content that, depending on the context and criterion being assessed, will or won’t comply with the provisions of the Code.

Criterion – the ability to challenge constructively within a team or committee setting

Compliant:

“Ms X provided an excellent example in her application of challenging in the context of her role as a board member of the Inversnecky Housing Association. She described how she challenged the perception of newer members that they would have a day to day role in the running of the organisation rather than overseeing and monitoring its strategic direction; at interview she explained how she did this in a constructive, engaging and facilitative way, offering to provide information and material at a future meeting in order to ensure all members had greater clarity on their role. Ms X provided a second example… The panel concluded that Ms X was highly skilled at challenging constructively within a team or committee setting”

Non-compliant (see highlights):

“Ms Y is a chartered accountant with PWC. She has held a mid-management role in the company for seventeen years although she had a four year break during that period. She came across as quite nervous at interview but nevertheless gave a reasonable example of challenging constructively during a staff meeting but it was from some time ago and not at the level of seniority that the body requires to be an effective board member as it was not at board level. She also didn’t appear to understand the differences between the role of the executive and non-executive and the panel concluded that this would mean she would find it difficult to operate effectively as a challenging board member.”

Please remember that whether or not an applicant summary’s contents are compliant is context-driven.

By way of example, if the criterion for selection relates to experience then a list of standalone statements about roles held which demonstrate that an applicant has relevant experience is compliant:

Criterion - Experience of the Scottish Criminal Justice System

“Mr Z is a practising Advocate, working on criminal cases. He has judicial experience as one of the Judges of the Courts of Appeal of Inversnecky since 2005.”

Knowledge can also sometimes be inferred from positions held and in such cases it is again perfectly legitimate to list relevant positions.

Criterion - Knowledge of the Scottish Criminal Justice System

“Professor Z is Emeritus Professor of Prison Studies in the University of Inversnecky. He was the founding Director of the Scottish Centre for Incarceration Studies (2002-2008) and a former prison governor. Professor Z has a PhD from the University of Aberdon in criminology.”